In IWCLUL talks, Miikka Silfverberg’s mention of collecting words from Wikipedia resonated with my earlier experiences working with Wikipedia dumps, especially the difficulty of it. I talked with some people at the conference and everyone seemed to agree that processing Wikipedia dumps takes a lot of time, which they could spend for something else. I am considering to publish plain text Wikipedia dumps and word frequency lists. While working in the DigiSami project, I familiarized myself with the utilities as well as the Wikimedia Tool Labs, so relatively little effort would be needed. The research value would be low, but it would be worth it, if enough people find these dumps and save time. A recent update is that Parsoid is planning to provide plain text format, so this is likely to become even easier in the future. Still, there might be some work to do collect pages into one archive and decide which parts of page will stay and which will be removed: for example converting an infobox to collection of isolated words is not useful for use cases such as WikiTalk, and it can also easily skew word frequencies.

I talked with Sjur Moshagen about keyboards for less resourced languages. Nowadays they have keyboards for Android and iOS, in addition to keyboards for computers (which already existed). They have some impressing additional features, like automatically adding missing accents to typed words. That would be too complicated to implement in jquery.ime, a project used by Wikimedia that implements keyboards in a browser. At least the aforementioned example uses finite state transducer. Running finite state tools in the browser does not yet feel realistic, even though some solutions exist*. The alternative of making requests to a remote service would slow down typing, except perhaps with some very clever implementation, which would probably be fragile at best. I have still to investigate whether there is some middle ground to bring the basic keyboard implementations to jquery.ime.

*Such as jsfst. One issue is that the implementations and the transducers themselves can take lot of space, which means we will run into same issues as when distributing large web fonts at Wikipedia.

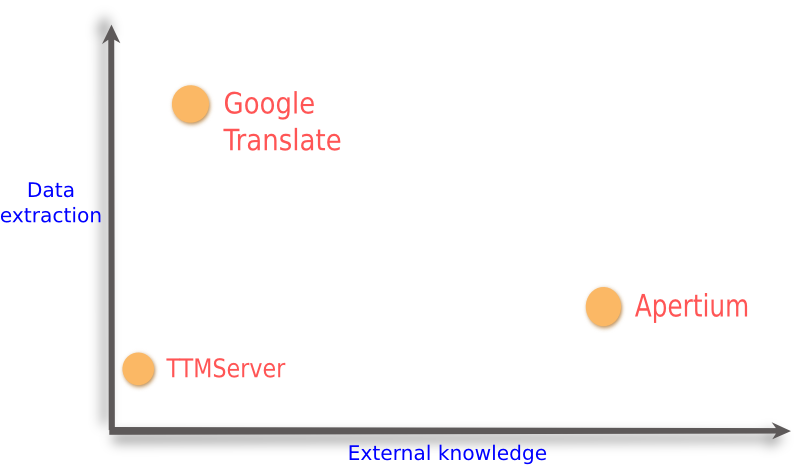

I spoke with Tommi Pirinen and Antti Kanner about implementing a dictionary application programming interface (API) for the Bank of Finnish Terminology in Arts and Sciences (BFT). That would allow direct use of BFT resources in translation tools like translatewiki.net and Wikimedia’s Content Translation project. It would also help indirectly, by using a dump for extending word lists in the Apertium machine translation software.

I spoke briefly about language identification with Tommi Jauhiainen who had a poster presentation about the project “The Finno-Ugric languages and the internet”. I had implemented one language detector myself, using an existing library. Curiously enough, many other people met in Wikimedia circles have also made their own implementations. Mine had severe problems classifying languages which are very close to each other. Tommi gave me a link for another language detector, which I would like to test in the future to compare its performance with previous attempts. We also talked about something I call “continuous” language identification, where the detector would detect parts of running text which are in a different language. A normal language detector will be useful for my open source translation memory service project, called InTense. Continuous language identification could be used to post-process Wikipedia articles and tag foreign text so that correct fonts are applied, and possibly also in WikiTalk-like applications, to provide the text-to-speech (TTS) with a hint on how to pronounce those words.

Reasonator is a software that generates visually pleasing summary pages in natural language and structured sections, based on structured data. More specifically, it uses Wikidata, which is the Wikimedia structured data project, developed by Wikimedia Germany. Reasonator works primarily for persons, though other types or subjects are being developed. Its localisation is limited, compared to the about three hundred languages of MediaWiki. Translating software which generates natural language sentences dynamically is very different from the usual software translation, which consists mostly of fixed strings with occasional placeholder which is replaced dynamically when showing text to an user.

Reasonator is a software that generates visually pleasing summary pages in natural language and structured sections, based on structured data. More specifically, it uses Wikidata, which is the Wikimedia structured data project, developed by Wikimedia Germany. Reasonator works primarily for persons, though other types or subjects are being developed. Its localisation is limited, compared to the about three hundred languages of MediaWiki. Translating software which generates natural language sentences dynamically is very different from the usual software translation, which consists mostly of fixed strings with occasional placeholder which is replaced dynamically when showing text to an user.

It is not a new idea to use grammatical framework (GF), which is a language translation software based on interlingua, for Reasonator. In fact I had proposed this earlier in private discussions to Gerard Meijssen, but this conference renewed my interest in the idea, as I attended the GF workshop held by Aarne Ranta, Inari Listenmaa and Francis Tyers. GF seems to be a good fit here, as it allows limited context and limited vocabulary translation to many languages simultaneously; vice versa, Wikidata will contain information like gender of people, which can be fed to GF to get proper grammar in the generated translations. It would be very interesting to have a prototype of a Reasonator-like software using GF as the backend. The downside of GF is that (I assume) it is not easy for our regular translators to work with, so work is needed to make it easier and more accessible. The hypothesis is that with GF backend we would get a better language support (as in grammatically correct and flexible) with less effort on the long run. That would mean providing access to all the Wikidata topics even in smaller languages, without the effort of manually writing articles.