I attended the International Workshop Series on Spoken Dialog Systems (IWSDS) as part of my studies. It was the first scientific event I had attended that required submitting a paper, and it was a great experience. More details below.

Poster. The paper we had submitted to the workshop was accepted as a poster presentation. Having never made a poster before, let alone one of A0 size, I was a little afraid that I would run into many technical and design issues. But there was no time for procrastination: a little more than a week before the conference, I simply started working on it. Luckily my university provided poster templates, so I didn’t need to worry about the general layout. Between the Adobe InDesign and PowerPoint templates, I chose the latter because I didn’t want to learn new software; I just wanted to get things done. It took a few hours to come up with the initial draft. Over the following days I went through 19 versions with my advisers until we declared it ready. Working on weekends and late at night is nothing new to me, but I was surprised that my advisers did the same. Whether this reflects dedication, short deadlines or the general problem of work spilling over into free time, I do not know.

Travel. I sent the poster to print and got it the day before I flew to San Francisco. My first leg was late, so I had to run in New York to catch my connection. I had been advised to put the poster in my checked luggage, so of course it did not make it. That wasn’t an issue though, since I had decided to fly in one day early to recover from jet lag. The next day I went back to the airport to pick up the poster and take my shuttle to the venue, which left from SFO.

Location. The workshop was held at an inn near Napa. I was told jokingly that the isolated place was chosen so that we couldn’t go out and had to stay together to discuss things. There was probably some truth in that. The setting and the good food counterbalanced the packed conference program and kept it from being overwhelming.

Presentations. The level of the presentations varied somewhat. At one end of the scale there was a presentation focused on math that I could not grasp. At the other end, both Dan Bohus and Louis-Philippe Morency gave captivating keynotes which sparked my interest and were easy to follow even for a complete newcomer to the topic like me.

What struck me the most were Dan’s words about situated interaction. He gave an example where they had a robot in the office and were creating algorithms to guess whether a person walking past the robot was going to engage with it. They were able to use machine learning to predict many seconds in advance whether the user would engage. But when they moved the robot a bit so that users approached from a different direction, the previous model no longer worked, and they had to retrain it with new data. The point of this and the other examples was to highlight that, in the context of human interaction, we must devise machine-learning algorithms capable of adapting to new contexts. I immediately drew a connection to the presentation by Filip Ginter (p. 28) at the Kites Symposium last year, where data mining was used for distributional semantics, i.e. machine learning was used to learn that the Finnish words kaunis, ihastuttava and hurmaava (roughly "beautiful", "delightful" and "charming") are in some way related. There is something very appealing in making machines learn language and interaction without explicitly giving them the rules. In the case of human interaction this is much more difficult, because there is less data available and there are so many variables to take into account.

Presence. I can’t help but wonder why, apart from Microsoft and some car companies, no big companies were present. I’m sure this kind of research is also done in other companies. I asked a few people this question:

Is what the other companies are doing (in the context of the workshop topics) not novel, or are they just not talking about their research and not contributing back? And if so, do we care?

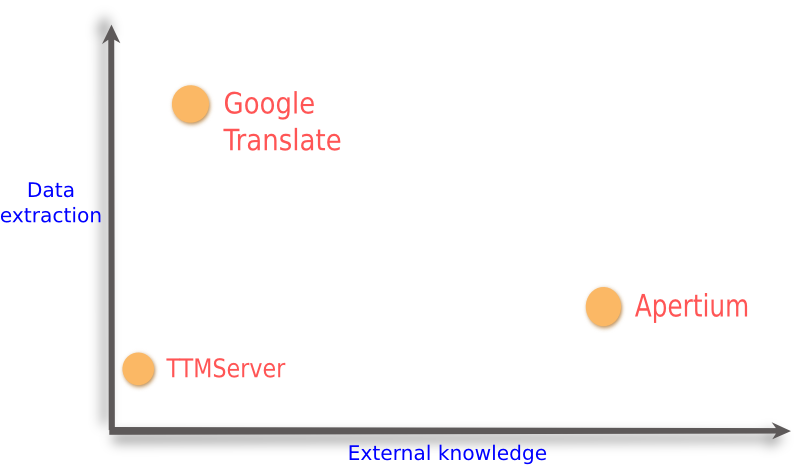

To summarize the answers and my conclusion: Apple Siri, for example, is not so novel; it is just a product done very well using simple technology. On the other hand, big companies have a vast amount of data that is not available to researchers. Essentially, Google and other big companies have a monopoly in certain areas, such as machine translation and speech recognition. We do care about this.

Lab tour. After the workshop there was a lab tour in Silicon Valley. We visited the Honda Research Institute, the Computer History Museum and Microsoft Research. Microsoft scored again by getting my attention with a new version of Kinect. It was also nice to see a functioning adding machine at the Computer History Museum. And I can’t avoid mentioning a nice spicy pasta lunch in the warm sunshine with good company (in increasing order of significance) in Mountain View, knowing that the Google offices were very close.