Now that I’ve slept on the presentations I attended at FOSDEM, it’s a good time to think about what I heard and how it relates to what I am doing, before I forget it. I didn’t get to talk to that many people this year, as I was mostly running from one talk to another.

This series of blog posts will have three parts. I will start with i18n-related topics and then cover other presentations roughly in the order I saw them (headers link to abstracts). There will also be a follow-up post on the gettext format detailing its good and bad sides from today’s point of view. Stay tuned!

Mozilla keeps pushing new i18n stuff, though the general feeling from this and other related talks is that they either have not defined the issue they are fixing, or they have defined it in a way that is completely different from what we are working on.

While we are trying to make translating as easy as possible for translators in a technical sense (they already have enough complexity due to language itself), the ILE proposed in this talk is essentially an IDE (integrated development environment): a glorified text editor of the kind programmers often use for programming. It has features like syntax highlighting and automatic completion for translation file syntax.

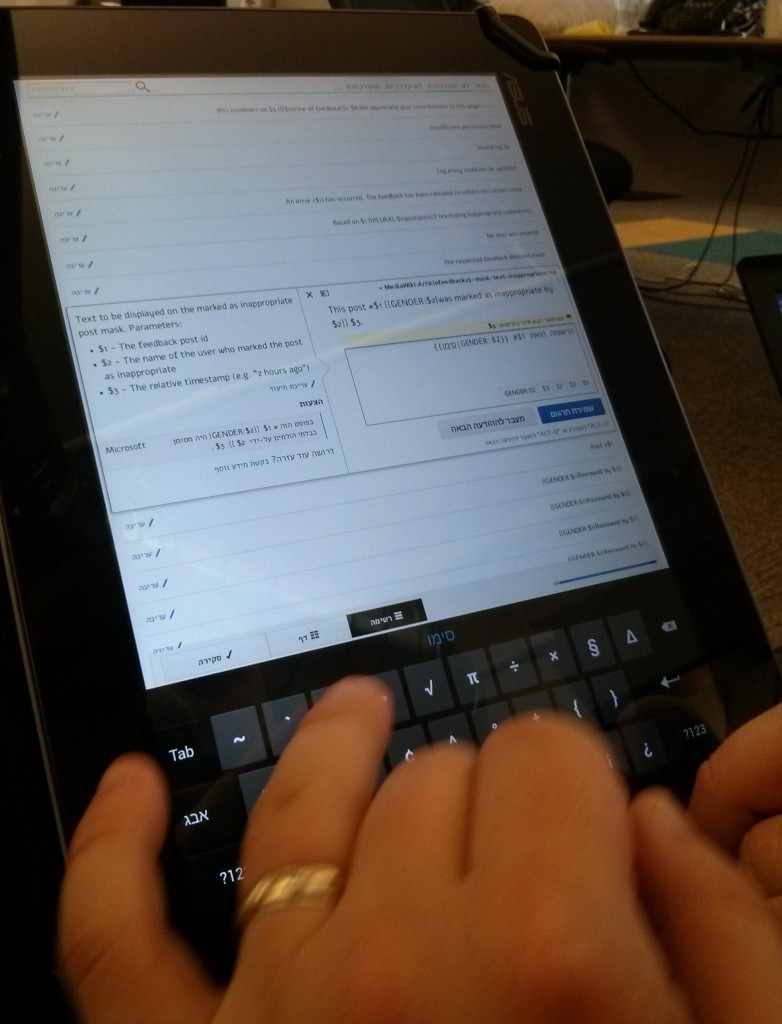

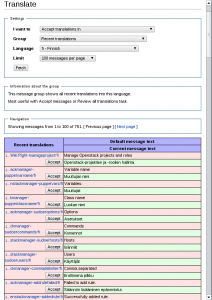

But do translators really care about the particular syntax of translations in a file? Or are they in fact happier if they do not need to care about files and version control systems at all, while still having access to aids like translation memories and change tracking in an interface created by UX designers, as we have in translatewiki.net?

“It helps to see the messages above and below to understand the context”

You can see related messages close to each other in almost any translation tool. Even so, showing related messages next to each other is not a replacement for proper documentation of the context of each message.

“I don’t see how form based translation tools would cope with more complex localisation file formats like L20n”

I don’t think the solution to facilitating proper localisation is to turn localisation itself into programming. The cases where more complex logic is needed are actually relatively few, and I think it is worthwhile to keep the common case as simple as possible while also supporting the more complex cases in a standardized, data-driven way, for example by using the CLDR.

Mozilla keeps pushing new i18n stuff: who is the user they are designing new tools for?

This talk was an update to the similar presentation on L20n last year. What I said about the previous talk regarding turning localisation into programming applies here too.

It is nice that you can specify grammatical gender for things, but this format does not really solve the problem that many variables actually come from user input, for which we cannot specify this information.

It is nice that you can make custom plural rules, but in almost all cases the standard set of plural rules that comes from standards like CLDR is enough.
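To make “the standard set of plural rules” concrete, here is a minimal sketch of what such rules look like for two languages. The function names are made up for this illustration, the rules cover whole numbers only, and they are written out by hand here rather than loaded from the CLDR data files, so check them against the CLDR before relying on them.

```python
def plural_category_en(n: int) -> str:
    """English, integers only: 'one' for exactly 1, 'other' for the rest."""
    return "one" if n == 1 else "other"


def plural_category_ru(n: int) -> str:
    """Russian, integers only: three categories chosen by the last digits."""
    if n % 10 == 1 and n % 100 != 11:
        return "one"    # 1, 21, 31, ... but not 11
    if 2 <= n % 10 <= 4 and not 12 <= n % 100 <= 14:
        return "few"    # 2-4, 22-24, ... but not 12-14
    return "many"       # 0, 5-20, 25-30, ...


if __name__ == "__main__":
    for count in (1, 2, 5, 11, 21, 22):
        print(count, plural_category_en(count), plural_category_ru(count))
```

The translator only supplies one form per category; picking the category is the job of the i18n library.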

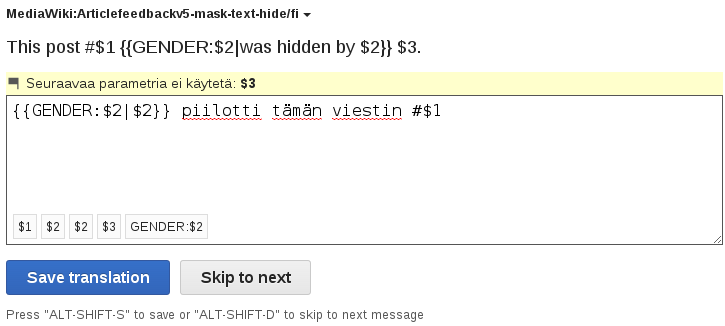

It is nice that you can mix gender and plural, and even multiple plurals, in one message using nested hashes (arrays in PHP), but it is not nice at all that you have to translate the message N*M*O times as the number of variables increases. I firmly believe that inline syntax like {{GENDER:$1|he|she}} eats {{PLURAL:$2|apple|apples}} is superior in this regard.
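As a rough illustration of the difference, here is a toy expander for the inline syntax. It is not MediaWiki’s parser: the names `expand` and `full_message_variants` are invented for this sketch, the plural choice is hard-coded to the English rule, and an unknown gender simply falls back to the first form.

```python
import re
from math import prod

# Matches {{GENDER:$1|a|b}} and {{PLURAL:$2|a|b}} (no nesting supported).
MAGIC = re.compile(r"\{\{(GENDER|PLURAL):\$(\d+)\|([^{}]*)\}\}")
GENDER_POSITION = {"male": 0, "female": 1, "unknown": 2}


def expand(message, params):
    """Replace each inline GENDER/PLURAL block with the matching form."""
    def replace(match):
        kind = match.group(1)
        value = params[int(match.group(2)) - 1]  # $1 is the first parameter
        forms = match.group(3).split("|")
        if kind == "GENDER":
            position = GENDER_POSITION.get(value, 2)
        else:
            position = 0 if value == 1 else 1    # English plural rule only
        # Fall back to the first form when the chosen one is not given.
        return forms[position] if position < len(forms) else forms[0]
    return MAGIC.sub(replace, message)


def full_message_variants(*forms_per_variable):
    """How many whole-message translations a nested-hash format needs."""
    return prod(forms_per_variable)


if __name__ == "__main__":
    msg = "{{GENDER:$1|he|she}} eats {{PLURAL:$2|apple|apples}}"
    print(expand(msg, ["female", 3]))
    print(full_message_variants(3, 2))
```

With the inline form the translator writes the sentence once. With one full message per combination, three genders and two plural forms already mean six copies, and every further variable multiplies that number.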

If we strip the plural, gender, time formatting etc. support from L20n, we actually just get a complex file format for storing things, something which we already have many variants of. The aforementioned features are usually provided by the i18n library (or definitely should be; unfortunately this is not always the case), so what they have actually done is move the complexity of language from i18n libraries and software developers to translators. Aiming at “keep common case simple, but support complex cases where needed”, I don’t think this, as presented, is a good trade-off between simplicity and flexibility.

This talk was about adapting some nice parts of L20n to the .properties format. The result is somewhat more complex than plain .properties and not as flexible as L20n. Even having gender and plural in the same message is problematic in this format.

I’d like to highlight two ideas in webl10n. Sidenote: why call it l10n when it is actually an i18n library for developers, similar to jquery.i18n?

The first idea is that you can have HTML like this:

<div data-l10n-id="retro">

<div>Please <a href="login/">log in</a></div>

</div>

And the translators see this:

retro = <div>Please <a>log in</a></div>

The translation, when displayed, is properly merged into the original HTML so that the classes and link targets are preserved. I don’t know what happens if the translation is outdated and the structure has changed, but I guess we should just not use outdated translations with this system. When escaping is handled properly, this is a very nice way to handle what we call lego messages, where the text of the link is in a separate message because, due to escaping, we can’t have the link and the link text in the same message.
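One way such a merge could work is sketched below. I have not checked how webl10n actually does it, so this is only an assumption: attributes are copied from the source markup onto the translated markup by matching tag names in order. The class and function names are invented for the sketch.

```python
from html import escape
from html.parser import HTMLParser


class StartTagCollector(HTMLParser):
    """Collect (tag, attrs) for every start tag of the source markup."""

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        self.tags.append((tag, attrs))


class AttributeMerger(HTMLParser):
    """Re-emit translated markup, taking attributes from the source tags."""

    def __init__(self, source_tags):
        super().__init__(convert_charrefs=False)
        self.pending = list(source_tags)
        self.out = []

    def handle_starttag(self, tag, attrs):
        # Use the first unused source tag with the same name, if there is one.
        for i, (src_tag, src_attrs) in enumerate(self.pending):
            if src_tag == tag:
                attrs = src_attrs
                del self.pending[i]
                break
        rendered = "".join(
            f' {name}="{escape(value or "", quote=True)}"' for name, value in attrs
        )
        self.out.append(f"<{tag}{rendered}>")

    def handle_endtag(self, tag):
        self.out.append(f"</{tag}>")

    def handle_data(self, data):
        self.out.append(data)

    def handle_entityref(self, name):
        self.out.append(f"&{name};")

    def handle_charref(self, name):
        self.out.append(f"&#{name};")


def merge_translation(source_html, translated_html):
    collector = StartTagCollector()
    collector.feed(source_html)
    collector.close()
    merger = AttributeMerger(collector.tags)
    merger.feed(translated_html)
    merger.close()
    return "".join(merger.out)


if __name__ == "__main__":
    source = '<div>Please <a href="login/">log in</a></div>'
    print(merge_translation(source, "<div>Bitte <a>anmelden</a></div>"))
```

A translation whose structure no longer matches the source would silently get the wrong attributes, or none at all, in this sketch, which is exactly the outdated-translation problem mentioned above.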

Another idea is that if you have HTML like this:

<input type="search" placeholder="Search messages" title="Message search box">

You can turn it into this:

<input type="search" data-l10n-id="searchbox">

And translators will see this (using the .properties format here):

searchbox.placeholder=Search messages

searchbox.title=Message search box
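The mapping from these keys back to attributes can be sketched as follows. This is a simplified illustration and not webl10n’s code: `parse_properties` and `localise_attributes` are invented names, only plain key=value lines are handled, and keys without a dot are stored under a made-up "textContent" slot that the attribute step ignores.

```python
def parse_properties(text):
    """Group 'id.attribute=value' lines by element id."""
    entries = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")):
            continue  # skip blank lines and comments
        key, _, value = line.partition("=")
        element_id, _, attribute = key.strip().partition(".")
        # Keys without a dot would carry the element text (assumption).
        slot = attribute or "textContent"
        entries.setdefault(element_id, {})[slot] = value.strip()
    return entries


def localise_attributes(attrs, entries):
    """Return a copy of attrs with the translated attributes filled in."""
    translated = entries.get(attrs.get("data-l10n-id"), {})
    result = dict(attrs)
    for name, value in translated.items():
        if name != "textContent":
            result[name] = value
    return result


if __name__ == "__main__":
    messages = parse_properties(
        "searchbox.placeholder=Search messages\n"
        "searchbox.title=Message search box\n"
    )
    element = {"type": "search", "data-l10n-id": "searchbox"}
    print(localise_attributes(element, messages))
```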

This simplifies the HTML the developers need to write.

Finally, take a look also at Pau’s Design talks at FOSDEM 2013.