Oh boy, time flies. Translatewiki.net turns six next Saturday. This is the first time we are celebrating its birthday. How did it all happen?

It was 2005, my last year at upper secondary school, when I set up a MediaWiki for myself to do some school work. I was 17, and in the fall of the same year I started studying at a university. Can you imagine how awkward it was to attend university while under the age of majority (18 years in Finland)? Anyway, I think the wiki was originally called Nukawiki, then Betawiki and finally translatewiki.net. The wiki has gone through many updates. It probably started with MediaWiki 1.4, whose release notes boast that the user interface language can be changed by the user. It has also gone through many computers, starting from my laptop and gradually moving to more powerful, more dedicated servers.

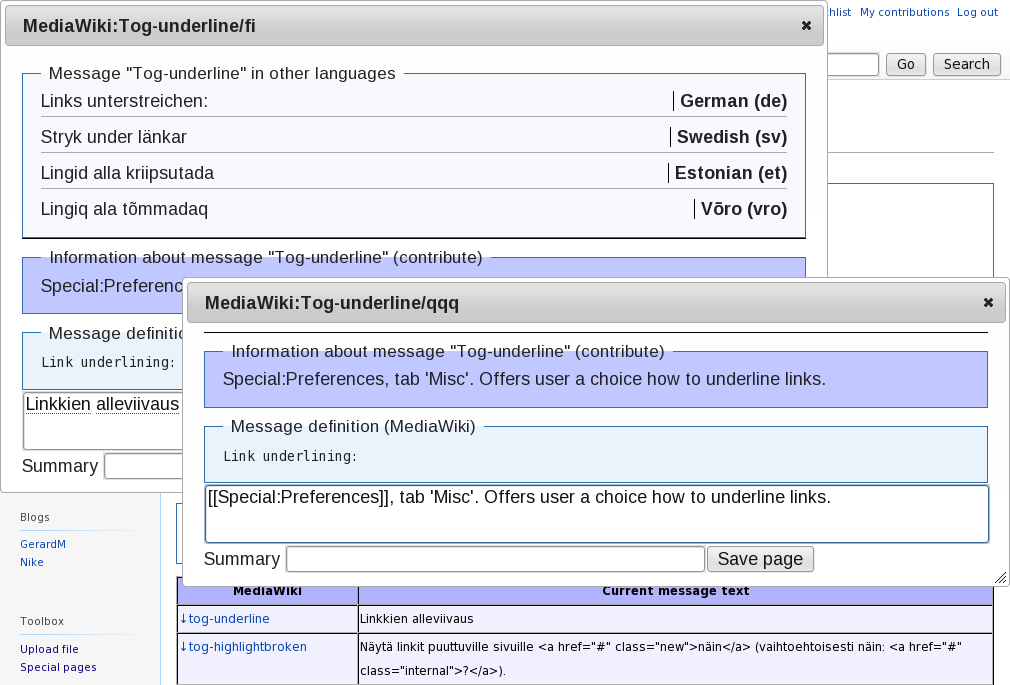

Even before the summer of 2006, when I started my obligatory six-month military service, I was using the wiki to translate MediaWiki into Finnish and to fix i18n problems. In 2006 we started inviting other translators to join. In February 2007 I started translating FreeCol into Finnish, and soon they moved all their translation-related activities to our wiki. One of the initial translators was Siebrand, who has had an enormous influence on the direction the project has taken since he joined.

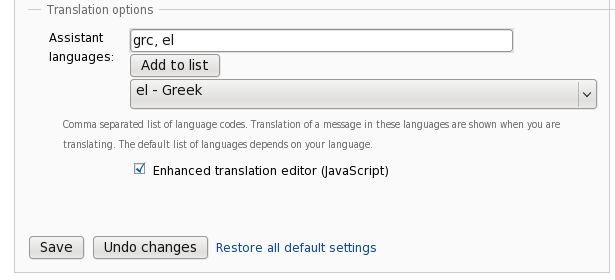

In other words, translatewiki.net started as a small hobby project for an entirely different purpose, then I used it to scratch a personal itch, and nowadays it is a thriving community with thousands of members. We are already huge by many metrics, we are still growing, and there don’t seem to be any limits to our size. I just cannot imagine how many people the work of translatewiki.net has impacted. For me this means an opportunity, but more importantly a challenge. How do we improve our service while scaling up? How can we provide better tools for translators, for ourselves and for the projects that use us? We have been successful thus far because we have been very efficient: it is almost scary how few people (albeit very dedicated ones) can keep everything running smoothly.

Translatewiki.net has had, and still has, a huge impact on my life. That is not just because it is a huge time sink for me. It is a manifestation of the many skills I’ve learned during my life. It feels wrong to say that it is my hobby, because sometimes it feels that studying is the hobby here. Nevertheless, my master’s thesis is nearing completion. I already have a job in mind, and I can’t say that translatewiki.net didn’t affect that.

I’m sincerely grateful to each and every one of you who has helped translatewiki.net become what it is today.