Meetup of the MediaWiki community. Or Wikimedia tech? What should the Wikimedia software development ecosystem be called? (Photo by henna, copyright status unknown.)

This is the third post about FOSDEM 2013; see 1/3: I18n in the WEB, Mozilla i18n and L20n for the first and 2/3: docs, code and community health, stability for the second. Links to the abstracts in the headers.

Scaling PHP with HipHop

HipHop is still alive, and faster than ever. It has evolved from a PHP-to-C++ translator into a bytecode interpreter with a JIT compiler. That is similar to how PHP itself works, except that PHP has no JIT. The speedups they are seeing are impressive (it was deployed at Facebook about a month ago). Given that they have removed the "compile everything before deploy" step, it is now much more feasible to use.

I’m considering giving HipHop a try on translatewiki.net later this year, probably after we have upgraded to at least Ubuntu 12.04, for which Facebook provides packages. It is still a pain in the ass to set up manually, as it was a few years ago. The Wikimedia Foundation (WMF) has dropped its evaluation, but perhaps they will reconsider after our experiences. HipHop, or hhvm as it is called now, has indeed changed a lot since then.

It was highlighted that the language features and libraries supported by hhvm and PHP differ to some extent. hhvm provides some nice features like strict type hinting, but it is unlikely that I can use them anytime soon: there is no way to take advantage of them on hhvm without breaking support for normal PHP, which really cannot be done in the MediaWiki ecosystem.

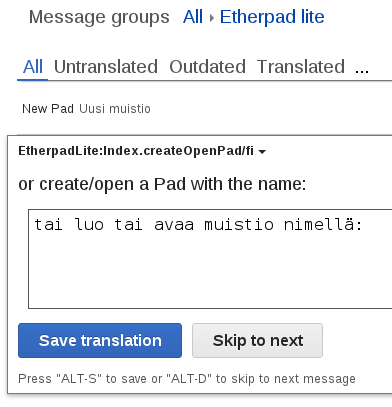

Community/BOF meetup

Almost 20 people were around, a few of them from outside WMF. Discussions circled around events like the Amsterdam Hackathon and MediaWiki groups. The most interesting part (to me) was what to call the Wikimedia software development ecosystem, so that it can be marketed properly. Suggestions ranged from extending the meaning of MediaWiki to cover everything, including mobile, gadgets and so on; to using Wikipedia, as it is the best-known brand; to creating a new Wikitech brand.

There are pros and cons to each of the above, but one thing is true: there is currently no name that can be used to refer to everything technical done around MediaWiki and Wikimedia and that would also be understood by potential participants. Also, MediaWiki development is not perceived to be cool anymore, because it’s PHP. But it isn’t just PHP. MediaWiki development is also Redis, Varnish, Puppet, Git, Solr, HipHop, semantic, node.js, mobile, OpenStack, and more. Quim Gil will continue working on a brand. Curious visitors can also compare this to what KDE did recently when they expanded the meaning of KDE to cover not only the desktop, but also the community and everything they do. The change process wasn’t painless for them, and wouldn’t be for us, but at the same time (IMHO) the change has been quite successful and beneficial to KDE.

Qt Project Update

Qt is doing well. Qt 5 is an evolution rather than a revolution (which is what Qt 4 was compared to Qt 3). Contributions from outside Nokia (and nowadays Digia) have risen to about one third of all commits. They are using Gerrit, like MediaWiki. But unlike MediaWiki, they have an explicit hierarchy, with maintainers who are responsible for keeping each subsystem in shape. It also means that there is always at least one person you can talk to in order to get your patch reviewed, unlike in the MediaWiki community.

Their platform support is also nice: Linux, Windows and OS X are fully supported, while iOS, Android and BlackBerry OS also work, more or less.

Fixing public procurement

Forgive me if I use incorrect terms. In a nutshell, there is a law in Finland (coming from the EU) that forbids governmental organizations from requesting software systems by naming a specific vendor. So, as a hypothetical example, “We want Microsoft Office on all our work stations in X department” is illegal, while “We want an office tools suite that includes documents, presentations, …” is legal. Free software people in Finland analysed how many times this law has been violated (quite a few) and have been sending letters asking the organizations in question to read the rules and fix their procurement.

The talk continued with the observation that there is no entity to enforce this rule, and that it is difficult to get the disadvantaged companies to sue. One side argued that focusing on fixing this particular issue harms the wide-scale education of people on this and other issues. So when is it useful to sue instead of trying to educate? When are the lost opportunities a bigger harm than the bad publicity and the money spent on suing? Apparently Microsoft has sued successfully in Finland and gained lots of money without a big PR hit. Open source solutions are usually discarded because the exit costs of the previous system are attributed to the new solution instead of to the old vendor lock-in solution.

All in all, the aim of this kind of work is to further open source use in governments by allowing free competition, and they want to do it EU-wide.

The Keeper of Secrets: The Dance of Community Leadership

This is the first time I’ve seen Leslie Hawthorn speak. In her talk, which was full of beer jokes (a bit too many for my taste), I caught some points:

- Don’t be a jerk.

- Stop gossiping and talk directly to people you have problems with.

- Don’t ignore difficult people; be brave enough to let them know your honest opinion.

- Don’t be a jerk while talking about difficult things with people; do it politely and cooperatively.

- Face-to-face meetings are essential to community building (my addition: also offer possibilities to do it in beerless, quiet places).